
Like it or not, JavaScript has been helping developers power the Internet since 1995. In that time, JavaScript usage has grown from small user experience enhancements to complex full-stack applications using Node.js on the server and one of many frameworks on the client such as Angular, React, or Vue.

Today, building JavaScript applications at scale remains a challenge. More and more teams are turning to TypeScript to supplement their JavaScript projects.

Node.js server applications can benefit from using TypeScript, as well. The goal of this tutorial is to show you how to build a new Node.js application using TypeScript and Express.

The Case for TypeScript

As a web developer, I long ago stopped resisting JavaScript, and have grown to appreciate its flexibility and ubiquity. Language features added to ES2015 and beyond have significantly improved its utility and reduced common frustrations of writing applications.

However, larger JavaScript projects demand tools such as ESLint to catch common mistakes, and greater discipline to saturate the code base with useful tests. As with any software project, a healthy team culture that includes a peer review process can improve quality and guard against issues that can creep into a project.

The primary benefits of using TypeScript are to catch more errors before they go into production and make it easier to work with your code base.

TypeScript is not a different language. It’s a flexible superset of JavaScript with ways to describe optional data types. All “standard” and valid JavaScript is also valid TypeScript. You can dial in as much or as little as you desire.

As soon as you add the TypeScript compiler or a TypeScript plugin to your favorite code editor, there are immediate safety and productivity benefits. TypeScript can alert you to misspelled functions and properties, detect passing the wrong types of arguments or the wrong number of arguments to functions, and provide smarter autocomplete suggestions.
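To make those benefits concrete, here is a small, self-contained sketch. The Guitar interface and the describeGuitar function are hypothetical names chosen to match this tutorial's theme; they are not part of the application you build below. The commented-out lines at the end are the kinds of mistakes the compiler reports before the code ever runs.

```typescript
// Hypothetical type used only to illustrate compile-time checking.
interface Guitar {
    make: string;
    model: string;
    year: number;
}

// The parameter types document and enforce what callers must pass.
function describeGuitar( guitar: Guitar, includeYear: boolean ): string {
    const base = `${ guitar.make } ${ guitar.model }`;
    return includeYear ? `${ base } (${ guitar.year })` : base;
}

const strat: Guitar = { make: "Fender", model: "Stratocaster", year: 1965 };

console.log( describeGuitar( strat, true ) ); // prints Fender Stratocaster (1965)

// Each of these lines is rejected by the TypeScript compiler if uncommented:
// describeGuitar( strat );          // wrong number of arguments
// describeGuitar( strat, "yes" );   // wrong argument type (string instead of boolean)
// console.log( strat.modle );       // misspelled property name
```

In an editor with TypeScript support, the same errors appear as you type, and autocomplete offers make, model, and year after typing strat.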

Build a Guitar Inventory Application with TypeScript and Node.js

Among guitar players, there’s a joke everyone should understand.

Q: “How many guitars do you need?”

A: “n + 1. Always one more.”

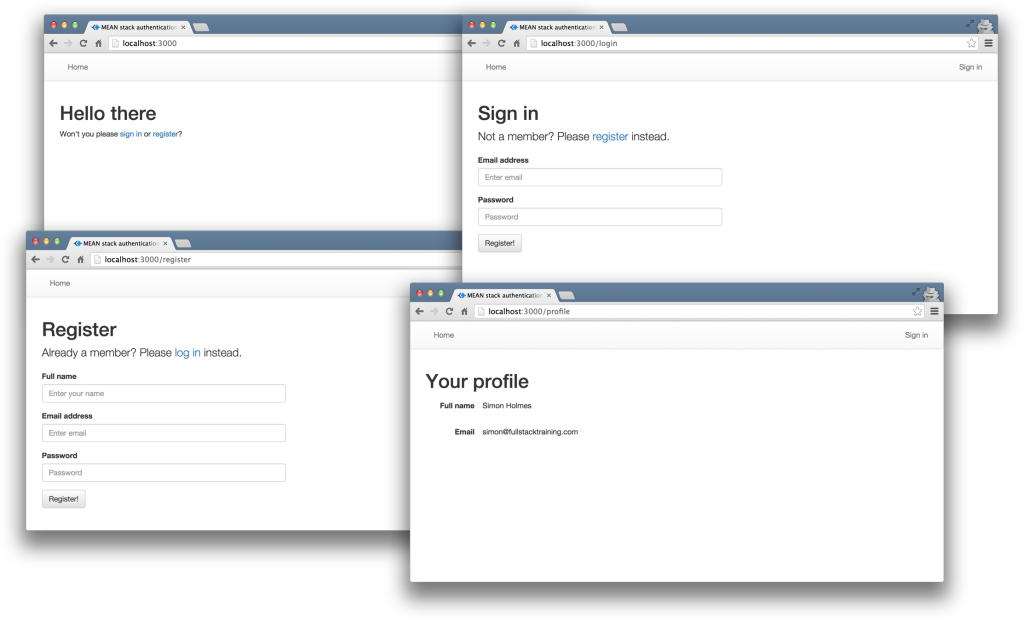

In this tutorial, you are going to create a new Node.js application to keep track of an inventory of guitars. In a nutshell, this tutorial uses Node.js with Express, EJS, and PostgreSQL on the backend, Vue, Materialize, and Axios on the frontend, Okta for account registration and authorization, and TypeScript to govern the JavaScripts!

![Guitar Inventory Demo]()

Create Your Node.js Project

Open up a terminal (Mac/Linux) or a command prompt (Windows) and type the following command:

```bash
node --version
```

If you get an error, or the version of Node.js you have is less than version 8, you’ll need to install Node.js. On Mac or Linux, I recommend you first install nvm and use nvm to install Node.js. On Windows, I recommend you use Chocolatey.

After ensuring you have a recent version of Node.js installed, create a folder for your project.

```bash
mkdir guitar-inventory
cd guitar-inventory
```

Use npm to initialize a package.json file.

```bash
npm init -y
```

Hello, world!

In this sample application, Express is used to serve web pages and implement an API. Dependencies are installed using npm. Add Express to your project with the following command.

```bash
npm install express
```

Next, open the project in your editor of choice.

If you don’t already have a favorite code editor, I use and recommend Visual Studio Code. VS Code has exceptional support for JavaScript and Node.js, such as smart code completion and debugging, and there’s a vast library of free extensions contributed by the community.

Create a folder named src. In this folder, create a file named index.js. Open the file and add the following JavaScript.

```javascript
const express = require( "express" );
const app = express();
const port = 8080; // default port to listen

// define a route handler for the default home page
app.get( "/", ( req, res ) => {
    res.send( "Hello world!" );
} );

// start the Express server
app.listen( port, () => {
    console.log( `server started at http://localhost:${ port }` );
} );
```

Next, update package.json to instruct npm on how to run your application. Change the main property value to point to src/index.js, and add a start script to the scripts object.

```json
"main": "src/index.js",
"scripts": {
    "start": "node .",
    "test": "echo \"Error: no test specified\" && exit 1"
},
```

Now, from the terminal or command line, you can launch the application.

```bash
npm run start
```

If all goes well, you should see this message written to the console.

```
server started at http://localhost:8080
```

Launch your browser and navigate to http://localhost:8080. You should see the text “Hello world!”

![Hello World]()

Note: To stop the web application, you can go back to the terminal or command prompt and press CTRL+C.

Set Up Your Node.js Project to Use TypeScript

The first step is to add the TypeScript compiler. You can install the compiler as a developer dependency using the --save-dev flag.

```bash
npm install --save-dev typescript
```

The next step is to add a tsconfig.json file. This file instructs TypeScript how to compile (transpile) your TypeScript code into plain JavaScript.

Create a file named tsconfig.json in the root folder of your project, and add the following configuration.

```json
{
    "compilerOptions": {
        "module": "commonjs",
        "esModuleInterop": true,
        "target": "es6",
        "noImplicitAny": true,
        "moduleResolution": "node",
        "sourceMap": true,
        "outDir": "dist",
        "baseUrl": ".",
        "paths": {
            "*": [
                "node_modules/*"
            ]
        }
    },
    "include": [
        "src/**/*"
    ]
}
```

Based on this tsconfig.json file, the TypeScript compiler will (attempt to) compile any files ending with .ts it finds in the src folder, and store the results in a folder named dist. Node.js uses the CommonJS module system, so the value for the module setting is commonjs. Also, the target version of JavaScript is ES6 (ES2015), which is compatible with modern versions of Node.js.

It’s also a great idea to add TSLint and create a tslint.json file that tells TSLint how to lint your code. If you’re not familiar with linting, a linter is a code analysis tool that alerts you to potential problems in your code beyond syntax issues.

Install tslint as a developer dependency.

```bash
npm install --save-dev tslint
```

Next, create a new file in the root folder named tslint.json and add the following configuration.

```json
{
    "defaultSeverity": "error",
    "extends": [
        "tslint:recommended"
    ],
    "jsRules": {},
    "rules": {
        "trailing-comma": [ false ]
    },
    "rulesDirectory": []
}
```

Next, update your package.json to change main to point to the new dist folder created by the TypeScript compiler. Also, add a couple of scripts to execute TSLint and the TypeScript compiler just before starting the Node.js server.

```json
"main": "dist/index.js",
"scripts": {
    "prebuild": "tslint -c tslint.json -p tsconfig.json --fix",
    "build": "tsc",
    "prestart": "npm run build",
    "start": "node .",
    "test": "echo \"Error: no test specified\" && exit 1"
},
```

Finally, change the extension of the src/index.js file from .js to .ts, the TypeScript extension, and run the start script.

```bash
npm run start
```

Note: You can run TSLint and the TypeScript compiler without starting the Node.js server using npm run build.

TypeScript errors

Oh no! Right away, you may see some errors logged to the console like these.

```
ERROR: /Users/reverentgeek/Projects/guitar-inventory/src/index.ts[12, 5]: Calls to 'console.log' are not allowed.

src/index.ts:1:17 - error TS2580: Cannot find name 'require'. Do you need to install type definitions for node? Try `npm i @types/node`.

1 const express = require( "express" );
                  ~~~~~~~

src/index.ts:6:17 - error TS7006: Parameter 'req' implicitly has an 'any' type.

6 app.get( "/", ( req, res ) => {
                  ~~~
```

These errors fall into two groups: lint rule violations reported by TSLint, and missing type information reported by the TypeScript compiler. TSLint considers using console.log to be an issue for production code. The best solution is to replace uses of console.log with a logging framework such as winston. For now, add the following comment to src/index.ts to disable the rule.

```typescript
app.listen( port, () => {
    // tslint:disable-next-line:no-console
    console.log( `server started at http://localhost:${ port }` );
} );
```

TypeScript prefers to use the import module syntax over require, so you’ll start by changing the first line in src/index.ts from:

```javascript
const express = require( "express" );
```

to:

```typescript
import express from "express";
```

Getting the right types

To assist TypeScript developers, library authors and community contributors publish companion libraries called TypeScript declaration files. Declaration files are published to the DefinitelyTyped open source repository, or sometimes found in the original JavaScript library itself.

Update your project so that TypeScript can use the type declarations for Node.js and Express.

```bash
npm install --save-dev @types/node @types/express
```

Next, rerun the start script and verify there are no more errors.

```bash
npm run start
```

Build a Better User Interface with Materialize and EJS

Your Node.js application is off to a great start, but perhaps not the best looking, yet. This step adds Materialize, a modern CSS framework based on Google’s Material Design, and Embedded JavaScript Templates (EJS), an HTML template language for Express. Materialize and EJS are a good foundation for a much better UI.

First, install EJS as a dependency.

```bash
npm install ejs
```

Next, make a new folder under /src named views. In the /src/views folder, create a file named index.ejs. Add the following code to /src/views/index.ejs.

```html
<!DOCTYPE html>
<html>
<head>
    <meta charset="utf-8" />
    <meta http-equiv="X-UA-Compatible" content="IE=edge">
    <title>Guitar Inventory</title>
    <meta name="viewport" content="width=device-width, initial-scale=1">
    <link rel="stylesheet" href="https://cdnjs.cloudflare.com/ajax/libs/materialize/1.0.0/css/materialize.min.css">
    <link rel="stylesheet" href="https://fonts.googleapis.com/icon?family=Material+Icons">
</head>
<body>
    <div class="container">
        <h1 class="header">Guitar Inventory</h1>
        <a class="btn" href="/guitars"><i class="material-icons right">arrow_forward</i>Get started!</a>
    </div>
</body>
</html>
```

Update /src/index.ts with the following code.

```typescript
import express from "express";
import path from "path";

const app = express();
const port = 8080; // default port to listen

// Configure Express to use EJS
app.set( "views", path.join( __dirname, "views" ) );
app.set( "view engine", "ejs" );

// define a route handler for the default home page
app.get( "/", ( req, res ) => {
    // render the index template
    res.render( "index" );
} );

// start the express server
app.listen( port, () => {
    // tslint:disable-next-line:no-console
    console.log( `server started at http://localhost:${ port }` );
} );
```

Add an asset build script for TypeScript

The TypeScript compiler generates the JavaScript files and writes them to the dist folder. However, it does not copy the other types of files the project needs to run, such as the EJS view templates. To accomplish this, create a build script that copies all the other files to the dist folder.

Install the needed modules and TypeScript declarations using these commands.

```bash
npm install --save-dev ts-node shelljs fs-extra nodemon rimraf npm-run-all
npm install --save-dev @types/fs-extra @types/shelljs
```

Here is a quick overview of the modules you just installed.

- ts-node. Use to run TypeScript files directly.

- shelljs. Use to execute shell commands such as copying files and removing directories.

- fs-extra. A module that extends the Node.js file system (fs) module with features such as reading and writing JSON files.

- rimraf. Use to recursively remove folders.

- npm-run-all. Use to execute multiple npm scripts sequentially or in parallel.

- nodemon. A handy tool for running Node.js in a development environment. Nodemon watches files for changes and automatically restarts the Node.js application when changes are detected. No more stopping and restarting Node.js!

Make a new folder in the root of the project named tools. Create a file in the tools folder named copyAssets.ts. Copy the following code into this file.

```typescript
import * as shell from "shelljs";

// Copy all the view templates
shell.cp( "-R", "src/views", "dist/" );
```
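If you would rather not add shelljs just to copy one folder, the same step can be done with Node's built-in fs module. The following is a sketch under that assumption, not the approach this tutorial uses: copyDir is a hypothetical helper that recursively copies a directory tree, which is what shell.cp( "-R", ... ) does for src/views. On recent Node.js versions, fs.cpSync with the recursive option is a one-call alternative.

```typescript
import * as fs from "fs";
import * as path from "path";

// Hypothetical helper: recursively copy every file and folder under srcDir into destDir.
function copyDir( srcDir: string, destDir: string ): void {
    // Create the destination folder (and any missing parents) if needed
    fs.mkdirSync( destDir, { recursive: true } );

    for ( const entry of fs.readdirSync( srcDir, { withFileTypes: true } ) ) {
        const srcPath = path.join( srcDir, entry.name );
        const destPath = path.join( destDir, entry.name );
        if ( entry.isDirectory() ) {
            // Descend into sub-folders such as views/partials
            copyDir( srcPath, destPath );
        } else if ( entry.isFile() ) {
            fs.copyFileSync( srcPath, destPath );
        }
    }
}

// Possible use in tools/copyAssets.ts, equivalent to copying src/views into dist/:
// copyDir( "src/views", "dist/views" );
```

Because the copy reads the folder contents itself, new templates added later (such as partials) are picked up without changing the script.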

Update npm scripts

Update the scripts in package.json to the following code.

```json
"scripts": {
    "clean": "rimraf dist/*",
    "copy-assets": "ts-node tools/copyAssets",
    "lint": "tslint -c tslint.json -p tsconfig.json --fix",
    "tsc": "tsc",
    "build": "npm-run-all clean lint tsc copy-assets",
    "dev:start": "npm-run-all build start",
    "dev": "nodemon --watch src -e ts,ejs --exec npm run dev:start",
    "start": "node .",
    "test": "echo \"Error: no test specified\" && exit 1"
},
```

Note: If you are not familiar with using npm scripts, they can be very powerful and useful to any Node.js project. Scripts can be chained together in several ways. One way to chain scripts together is to use the pre and post prefixes. For example, if you have one script labeled start and another labeled prestart, executing npm run start at the terminal will first run prestart, and only after it successfully finishes does start run.
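The pre hook behavior described in the note can be modeled in a few lines. This is a simplified sketch, assuming only the pre/post naming convention and scripts that call other scripts with npm run; executionOrder is a hypothetical name, and real npm runs each command in a shell and stops the chain when a script fails. Applied to the scripts from the earlier package.json, it shows why npm run start also lints and compiles the project.

```typescript
type Scripts = { [ name: string ]: string | undefined };

// Record the order in which scripts would start for `npm run <name>`:
// "pre<name>" (if defined), then "<name>", then "post<name>" (if defined).
// Commands of the form "npm run <other>" are followed recursively.
function executionOrder( scripts: Scripts, name: string, order: string[] = [] ): string[] {
    for ( const step of [ `pre${ name }`, name, `post${ name }` ] ) {
        const command = scripts[ step ];
        if ( command === undefined ) {
            continue; // hook not defined, npm simply skips it
        }
        order.push( step );
        const match = /^npm run ([\w:-]+)$/.exec( command.trim() );
        if ( match ) {
            executionOrder( scripts, match[ 1 ], order );
        }
    }
    return order;
}

// The scripts from the earlier version of package.json
const earlierScripts: Scripts = {
    prebuild: "tslint -c tslint.json -p tsconfig.json --fix",
    build: "tsc",
    prestart: "npm run build",
    start: "node .",
};

console.log( executionOrder( earlierScripts, "start" ).join( " -> " ) );
// prints prestart -> prebuild -> build -> start
```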

Now run the application and navigate to http://localhost:8080.

```bash
npm run dev
```

![Guitar Inventory home page]()

The home page is starting to look better! Of course, the Get Started button leads to a disappointing error message. No worries! The fix for that is coming soon!

A Better Way to Manage Configuration Settings in Node.js

Node.js applications typically use environment variables for configuration. However, managing environment variables can be a chore. A popular module for managing application configuration data is dotenv.

Install dotenv as a project dependency.

```bash
npm install dotenv
npm install --save-dev @types/dotenv
```

Create a file named .env in the root folder of the project, and add the following code.

```
# Set to production when deploying to production
NODE_ENV=development

# Node.js server configuration
SERVER_PORT=8080
```

Note: When using a source control system such as git, do not add the .env file to source control. Each environment requires a custom .env file. It is recommended you document the values expected in the .env file in the project README or a separate .env.sample file.
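Conceptually, dotenv.config() reads the .env file and copies each KEY=VALUE pair into process.env, leaving any variable that is already set untouched. The sketch below illustrates that idea only; parseEnv and applyEnv are hypothetical helpers, and the real dotenv parser handles more cases, such as quoted and multiline values, so keep using the library in the application itself.

```typescript
// Hypothetical, simplified parser: KEY=VALUE lines, skipping blanks and # comments.
function parseEnv( text: string ): Record<string, string> {
    const result: Record<string, string> = {};
    for ( const rawLine of text.split( /\r?\n/ ) ) {
        const line = rawLine.trim();
        if ( line === "" || line.charAt( 0 ) === "#" ) {
            continue; // blank line or comment
        }
        const eq = line.indexOf( "=" );
        if ( eq <= 0 ) {
            continue; // not a KEY=VALUE line
        }
        result[ line.slice( 0, eq ).trim() ] = line.slice( eq + 1 ).trim();
    }
    return result;
}

// Copy parsed values into an environment object without overwriting existing
// variables, which mirrors dotenv's default behavior.
function applyEnv( parsed: Record<string, string>, env: Record<string, string | undefined> ): void {
    for ( const key of Object.keys( parsed ) ) {
        if ( env[ key ] === undefined ) {
            env[ key ] = parsed[ key ];
        }
    }
}

const sample = [ "# Node.js server configuration", "SERVER_PORT=8080" ].join( "\n" );
const settings: Record<string, string | undefined> = {};
applyEnv( parseEnv( sample ), settings ); // with real code this target would be process.env
console.log( settings.SERVER_PORT ); // prints 8080
```

The "existing variables win" rule is what lets a production host set NODE_ENV=production in its real environment while developers keep NODE_ENV=development in their local .env file.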

Now, update src/index.ts to use dotenv to configure the application server port value.

```typescript
import dotenv from "dotenv";
import express from "express";
import path from "path";

// initialize configuration
dotenv.config();

// port is now available to the Node.js runtime
// as if it were an environment variable
const port = process.env.SERVER_PORT;

const app = express();

// Configure Express to use EJS
app.set( "views", path.join( __dirname, "views" ) );
app.set( "view engine", "ejs" );

// define a route handler for the default home page
app.get( "/", ( req, res ) => {
    // render the index template
    res.render( "index" );
} );

// start the express server
app.listen( port, () => {
    // tslint:disable-next-line:no-console
    console.log( `server started at http://localhost:${ port }` );
} );
```

You will use the .env for much more configuration information as the project grows.
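One detail worth knowing as the configuration grows: every value in process.env is a string, or undefined when the variable is not set, so SERVER_PORT arrives as "8080" rather than 8080. The sketch below converts and validates the port in one place. The readPort name and the fallback of 8080 are assumptions for illustration; the tutorial code passes the raw value to app.listen unchanged.

```typescript
// Hypothetical helper: read SERVER_PORT as a number, with a fallback and validation.
function readPort( env: Record<string, string | undefined>, fallback = 8080 ): number {
    const raw = env.SERVER_PORT;
    if ( raw === undefined || raw.trim() === "" ) {
        return fallback; // not configured, use the default
    }
    const trimmed = raw.trim();
    if ( !/^\d+$/.test( trimmed ) ) {
        throw new Error( `SERVER_PORT must be a whole number, got "${ raw }"` );
    }
    const port = Number( trimmed );
    if ( port < 1 || port > 65535 ) {
        throw new Error( `SERVER_PORT must be between 1 and 65535, got ${ port }` );
    }
    return port;
}

// In src/index.ts this could replace the untyped assignment:
// const port = readPort( process.env );
```

Failing fast with a clear message is usually easier to debug than a server that silently listens on an unexpected port.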

Easily Add Authentication to Node and Express

Adding user registration and login (authentication) to any application is not a trivial task. The good news is Okta makes this step very easy. To begin, create a free developer account with Okta. First, navigate to developer.okta.com and click the Create Free Account button, or click the Sign Up button.

![Sign up for free account]()

After creating your account, click the Applications link at the top, and then click Add Application.

![Create application]()

Next, choose a Web Application and click Next.

![Create a web application]()

Enter a name for your application, such as Guitar Inventory. Verify the port number is the same as configured for your local web application. Then, click Done to finish creating the application.

![Application settings]()

Copy and paste the following code into your .env file.

```
# Okta configuration
OKTA_ORG_URL=https://{yourOktaDomain}
OKTA_CLIENT_ID={yourClientId}
OKTA_CLIENT_SECRET={yourClientSecret}
```

In the Okta application console, click on your new application’s General tab, and find near the bottom of the page a section titled “Client Credentials.” Copy the Client ID and Client secret values and paste them into your .env file to replace {yourClientId} and {yourClientSecret}, respectively.

![Client credentials]()

Enable self-service registration

One of the great features of Okta is allowing users of your application to sign up for an account. By default, this feature is disabled, but you can easily enable it. First, click on the Users menu and select Registration.

![User registration]()

- Click on the Edit button.

- Change Self-service registration to Enabled.

- Click the Save button at the bottom of the form.

![Enable self-registration]()

Secure your Node.js application

The last step to securing your Node.js application is to configure Express to use the Okta OpenID Connect (OIDC) middleware.

```bash
npm install @okta/oidc-middleware express-session
npm install --save-dev @types/express-session
```

Next, update your .env file to add a HOST_URL and SESSION_SECRET value. You may change the SESSION_SECRET value to any string you wish.

```
# Node.js server configuration
SERVER_PORT=8080
HOST_URL=http://localhost:8080
SESSION_SECRET=MySuperCoolAndAwesomeSecretForSigningSessionCookies
```
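Rather than inventing a secret by hand, you can generate a random one with Node's built-in crypto module. This is a minimal sketch; the generateSessionSecret name and the 32-byte length are assumptions, not requirements of express-session. Run it once and paste the printed line into .env. The secret should stay stable, because session cookies signed with an old secret no longer verify after it changes.

```typescript
import { randomBytes } from "crypto";

// Hypothetical helper: byteLength random bytes, hex-encoded (2 characters per byte).
function generateSessionSecret( byteLength = 32 ): string {
    return randomBytes( byteLength ).toString( "hex" );
}

// Prints a ready-to-paste .env line with a 64-character secret
console.log( `SESSION_SECRET=${ generateSessionSecret() }` );
```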

Create a folder under src named middleware. Add a file to the src/middleware folder named sessionAuth.ts. Add the following code to src/middleware/sessionAuth.ts.

```typescript
import { ExpressOIDC } from "@okta/oidc-middleware";
import session from "express-session";

export const register = ( app: any ) => {
    // Create the OIDC client
    const oidc = new ExpressOIDC( {
        client_id: process.env.OKTA_CLIENT_ID,
        client_secret: process.env.OKTA_CLIENT_SECRET,
        issuer: `${ process.env.OKTA_ORG_URL }/oauth2/default`,
        redirect_uri: `${ process.env.HOST_URL }/authorization-code/callback`,
        scope: "openid profile"
    } );

    // Configure Express to use authentication sessions
    app.use( session( {
        resave: true,
        saveUninitialized: false,
        secret: process.env.SESSION_SECRET
    } ) );

    // Configure Express to use the OIDC client router
    app.use( oidc.router );

    // add the OIDC client to the app.locals
    app.locals.oidc = oidc;
};
```

At this point, if you are using a code editor like VS Code, you may see TypeScript complaining about the @okta/oidc-middleware module. At the time of this writing, this module does not yet have an official TypeScript declaration file. For now, create a file in the src folder named global.d.ts and add the following code.

```typescript
declare module "@okta/oidc-middleware";
```

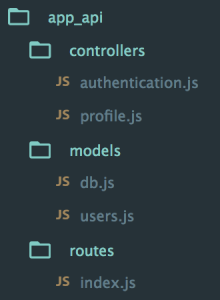

Refactor routes

As the application grows, you will add many more routes. It is a good idea to define all the routes in one area of the project. Make a new folder under src named routes. Add a new file to src/routes named index.ts. Then, add the following code to this new file.

```typescript
import * as express from "express";

export const register = ( app: express.Application ) => {
    const oidc = app.locals.oidc;

    // define a route handler for the default home page
    app.get( "/", ( req: any, res ) => {
        res.render( "index" );
    } );

    // define a secure route handler for the login page that redirects to /guitars
    app.get( "/login", oidc.ensureAuthenticated(), ( req, res ) => {
        res.redirect( "/guitars" );
    } );

    // define a route to handle logout
    app.get( "/logout", ( req: any, res ) => {
        req.logout();
        res.redirect( "/" );
    } );

    // define a secure route handler for the guitars page
    app.get( "/guitars", oidc.ensureAuthenticated(), ( req: any, res ) => {
        res.render( "guitars" );
    } );
};
```
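The /login and /guitars routes are protected by oidc.ensureAuthenticated(), a middleware that runs before the route handler. The idea behind such a guard is small enough to sketch. The following is a framework-agnostic illustration, not Okta's implementation: requireLogin, GuardRequest, and GuardResponse are hypothetical names, the minimal interfaces stand in for Express's request and response objects so the example needs no external packages, and it assumes the request exposes an isAuthenticated() check. Okta's guard also takes care of sending the user into the Okta sign-in flow, which this sketch leaves out.

```typescript
// Minimal stand-ins for the parts of Express's req/res the guard touches (assumed shapes).
interface GuardRequest {
    isAuthenticated(): boolean;
}

interface GuardResponse {
    redirect( url: string ): void;
}

type Next = () => void;

// Hypothetical middleware factory: authenticated requests continue to the
// route handler; everyone else is redirected to loginPath.
function requireLogin( loginPath: string ) {
    return ( req: GuardRequest, res: GuardResponse, next: Next ): void => {
        if ( req.isAuthenticated() ) {
            next(); // e.g. continue to res.render( "guitars" )
        } else {
            res.redirect( loginPath );
        }
    };
}
```

Because the factory returns an ordinary ( req, res, next ) function, it slots into a route the same way: app.get( "/guitars", guard, handler ). Do not apply such a guard to the login route itself, or unauthenticated users would be redirected in a loop.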

Next, update src/index.ts to use the sessionAuth and routes modules you created.

```typescript
import dotenv from "dotenv";
import express from "express";
import path from "path";
import * as sessionAuth from "./middleware/sessionAuth";
import * as routes from "./routes";

// initialize configuration
dotenv.config();

// port is now available to the Node.js runtime
// as if it were an environment variable
const port = process.env.SERVER_PORT;

const app = express();

// Configure Express to use EJS
app.set( "views", path.join( __dirname, "views" ) );
app.set( "view engine", "ejs" );

// Configure session auth
sessionAuth.register( app );

// Configure routes
routes.register( app );

// start the express server
app.listen( port, () => {
    // tslint:disable-next-line:no-console
    console.log( `server started at http://localhost:${ port }` );
} );
```

Next, create a new file for the guitar list view template at src/views/guitars.ejs and enter the following HTML.

```html
<!DOCTYPE html>
<html>
<head>
    <meta charset="utf-8" />
    <meta http-equiv="X-UA-Compatible" content="IE=edge">
    <title>Guitar Inventory</title>
    <meta name="viewport" content="width=device-width, initial-scale=1">
    <link rel="stylesheet" href="https://cdnjs.cloudflare.com/ajax/libs/materialize/1.0.0/css/materialize.min.css">
    <link rel="stylesheet" href="https://fonts.googleapis.com/icon?family=Material+Icons">
</head>
<body>
    <div class="container">
        <h1 class="header">Guitar Inventory</h1>
        <p>Your future list of guitars!</p>
    </div>
</body>
</html>
```

Finally, run the application.

```bash
npm run dev
```

Note: To verify authentication is working as expected, open a new browser or use a private/incognito browser window.

Click the Get Started button. If everything goes well, log in with your Okta account, and Okta should automatically redirect you back to the “Guitar List” page!

![Okta login]()

With authentication working, you can take advantage of the user profile information returned from Okta. The OIDC middleware automatically attaches a userContext object and an isAuthenticated() function to every request. This userContext has a userinfo property that contains information that looks like the following object.

```javascript
{
    sub: '00abc12defg3hij4k5l6',
    name: 'First Last',
    locale: 'en-US',
    preferred_username: 'account@company.com',
    given_name: 'First',
    family_name: 'Last',
    zoneinfo: 'America/Los_Angeles',
    updated_at: 1539283620
}
```

The first step is to get the user profile object and pass it to the views as data. Update src/routes/index.ts with the following code.

```typescript
import * as express from "express";

export const register = ( app: express.Application ) => {
    const oidc = app.locals.oidc;

    // define a route handler for the default home page
    app.get( "/", ( req: any, res ) => {
        const user = req.userContext ? req.userContext.userinfo : null;
        res.render( "index", { isAuthenticated: req.isAuthenticated(), user } );
    } );

    // define a secure route handler for the login page that redirects to /guitars
    app.get( "/login", oidc.ensureAuthenticated(), ( req, res ) => {
        res.redirect( "/guitars" );
    } );

    // define a route to handle logout
    app.get( "/logout", ( req: any, res ) => {
        req.logout();
        res.redirect( "/" );
    } );

    // define a secure route handler for the guitars page
    app.get( "/guitars", oidc.ensureAuthenticated(), ( req: any, res ) => {
        const user = req.userContext ? req.userContext.userinfo : null;
        res.render( "guitars", { isAuthenticated: req.isAuthenticated(), user } );
    } );
};
```
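Both handlers repeat the same expression and type req as any, which switches off type checking for everything the views receive. A small typed helper can describe the userinfo object shown earlier and build the view data in one place. This is a sketch under assumptions: the UserInfo and OidcRequest interfaces are written by hand from the sample object above (they are not declarations published by Okta), which claims appear depends on the requested scopes, and viewData is a hypothetical name.

```typescript
// Hand-written description of the sample userinfo object (assumed, not official).
interface UserInfo {
    sub: string;
    name: string;
    locale?: string;
    preferred_username?: string;
    given_name?: string;
    family_name?: string;
    zoneinfo?: string;
    updated_at?: number;
}

// The parts of the request the OIDC middleware is described as attaching.
interface OidcRequest {
    userContext?: { userinfo: UserInfo };
    isAuthenticated(): boolean;
}

interface ViewData {
    isAuthenticated: boolean;
    user: UserInfo | null;
}

// Replaces the repeated `req.userContext ? req.userContext.userinfo : null` logic.
function viewData( req: OidcRequest ): ViewData {
    const user = req.userContext ? req.userContext.userinfo : null;
    return { isAuthenticated: req.isAuthenticated(), user };
}

// Possible use inside a route handler:
// res.render( "guitars", viewData( req ) );
```

With the data typed this way, a typo such as user.nmae is caught by the compiler in route code, although the EJS templates themselves are still unchecked.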

Make a new folder under src/views named partials. Create a new file in this folder named nav.ejs. Add the following code to src/views/partials/nav.ejs.

```html
<nav>
    <div class="nav-wrapper">
        <a href="/" class="brand-logo"><% if ( user ) { %><%= user.name %>'s <% } %>Guitar Inventory</a>
        <ul id="nav-mobile" class="right hide-on-med-and-down">
            <li><a href="/guitars">My Guitars</a></li>
            <% if ( isAuthenticated ) { %>
            <li><a href="/logout">Logout</a></li>
            <% } %>
            <% if ( !isAuthenticated ) { %>
            <li><a href="/login">Login</a></li>
            <% } %>
        </ul>
    </div>
</nav>
```

Modify the src/views/index.ejs and src/views/guitars.ejs files. Immediately following the <body> tag, insert the following code.

```html
<body>
    <%- include( "partials/nav" ) %>
```

With these changes in place, your application now has a navigation menu at the top that changes based on the login status of the user.

![Navigation]()

Create an API with Node and PostgreSQL

The next step is to add the API to the Guitar Inventory application. However, before moving on, you need a way to store data.

Create a PostgreSQL database

This tutorial uses PostgreSQL. To make things easier, use Docker to set up an instance of PostgreSQL. If you don’t already have Docker installed, you can follow the install guide.

Once you have Docker installed, run the following command to download the latest PostgreSQL container.

```bash
docker pull postgres:latest
```

Now, run this command to create an instance of a PostgreSQL database server. Feel free to change the administrator password value.

```bash
docker run -d --name guitar-db -p 5432:5432 -e 'POSTGRES_PASSWORD=p@ssw0rd42' postgres
```

Note: If you already have PostgreSQL installed locally, you will need to change the -p parameter to map port 5432 to a different port that does not conflict with your existing instance of PostgreSQL.

Here is a quick explanation of the previous Docker parameters.

- -d: This launches the container in detached mode, so it runs in the background.

- --name: This gives your Docker container a friendly name, which is useful for stopping and starting containers.

- -p: This maps the host (your computer) port 5432 to the container’s port 5432. PostgreSQL, by default, listens for connections on TCP port 5432.

- -e: This sets an environment variable in the container. In this example, the administrator password is p@ssw0rd42. You can change this value to any password you desire.

- postgres: This final parameter tells Docker to use the postgres image.

Note: If you restart your computer, you may need to restart the Docker container. You can do that using the docker start guitar-db command.

Install the PostgreSQL client module and type declarations using the following commands.

```bash
npm install pg pg-promise
npm install --save-dev @types/pg
```

Database configuration settings

Add the following settings to the end of the .env file.

```
# Postgres configuration
PGHOST=localhost
PGUSER=postgres
PGDATABASE=postgres
PGPASSWORD=p@ssw0rd42
PGPORT=5432
```

Note: If you changed the database administrator password, be sure to replace the default p@ssw0rd42 with that password in this file.
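node-postgres (the pg module) falls back to these same PG* environment variables for any connection setting you do not supply, so the values above are enough for a local setup. If you ever need a single connection string instead, note that a password such as p@ssw0rd42 contains a character that is reserved in URLs ("@" separates the credentials from the host). The following sketch, with the hypothetical helper name pgConnectionString, percent-encodes the credentials; pg-promise also accepts a plain configuration object, which avoids encoding altogether.

```typescript
// Hypothetical helper: build a postgres:// URI from PG* settings.
function pgConnectionString( env: Record<string, string | undefined> ): string {
    const required = [ "PGHOST", "PGUSER", "PGDATABASE", "PGPASSWORD" ] as const;
    for ( const key of required ) {
        if ( !env[ key ] ) {
            throw new Error( `Missing required setting ${ key } in .env` );
        }
    }
    // Percent-encode values that may contain reserved characters such as "@"
    const user = encodeURIComponent( env.PGUSER as string );
    const password = encodeURIComponent( env.PGPASSWORD as string );
    const database = encodeURIComponent( env.PGDATABASE as string );
    const port = env.PGPORT || "5432"; // PostgreSQL's default port
    return `postgres://${ user }:${ password }@${ env.PGHOST }:${ port }/${ database }`;
}

console.log( pgConnectionString( {
    PGHOST: "localhost",
    PGUSER: "postgres",
    PGDATABASE: "postgres",
    PGPASSWORD: "p@ssw0rd42",
    PGPORT: "5432",
} ) );
// prints postgres://postgres:p%40ssw0rd42@localhost:5432/postgres
```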

Add a database build script

You need a build script to initialize the PostgreSQL database. This script should read in a .pgsql file and execute the SQL commands against the local database.

In the tools folder, create two files: initdb.ts and initdb.pgsql. Copy and paste the following code into initdb.ts.

The post How to Use TypeScript to Build a Node API with Express appeared first on SitePoint.
